Student feedback prediction using Machine Learning


ABSTRACT

Advances in natural language processing (NLP) and educational technology, as well as the availability of unprecedented amounts of educationally-relevant text and speech data, have led to an increasing interest in using NLP to address the needs of teachers and students. Educational applications differ in many ways, however, from the types of applications for which NLP systems are typically developed. This paper will organize and give an overview of research in this area, focusing on opportunities as well as challenges.

INTRODUCTION

Natural language processing (NLP) has a history of more than 50 years as a scientific discipline, with applications to education appearing as early as the 1960s. Initial work focused on automatically scoring student texts as well as on developing text-based dialogue tutoring systems, while later work also included spoken language technologies. While research in these traditional application areas continues to progress, recent phenomena such as big data, mobile technologies, social media, and MOOCs have created many new research opportunities and challenges. Commercial applications already include high-stakes assessments of text and speech, writing assistants, and online instructional environments, with companies increasingly reaching out to the research community.

As shown in Figure 1, NLP can enhance educational technology in several ways. As an example of the first role, NLP is being used to automate the scoring of student texts with respect to linguistic dimensions such as grammatical correctness or organizational structure. As an example of the second role, dialogue technologies are being used to achieve the benefits of human one-on-one tutoring, particularly in STEM domains, in a cost-effective and scalable manner. Examples of the third role include processing text from the web in order to personalize instructional materials to the interests of individual students, automate the generation of test questions for teachers, or (semi-)automate the authoring of an educational technology system.

Given the increasing interest in applying natural language processing to education, communities have emerged that now sponsor regular meetings and shared tasks. Beginning in the 1990s, a series of tutorial dialogue systems workshops began to span the Artificial Intelligence and Education and the Natural Language Processing communities, including an AAAI Fall Symposium. Since 2003, ten workshops on the ‘Innovative Use of NLP for Building Educational Applications’ have been held at the annual conference of the North American Chapter of the Association for Computational Linguistics. In 2006, the ‘Speech and Language Technology in Education’ special interest group of the International Speech Communication Association was formed and has since organized six workshops; members have also organized related special sessions at Interspeech conferences. Recent shared academic tasks have included student response analysis (Dzikovska et al. 2013), grammatical error detection (Ng et al. 2014), and prediction of MOOC attrition from discussion forums (Rose and Siemens 2014). There have also been highly visible competitions sponsored by the Hewlett Foundation in the areas of essay and short answer response scoring.

Technological innovation is first motivated by and later addresses societal need. Technological innovation similarly is first informed by and later contributes to educationally relevant theories and data. Starting at the upper right of the figure, a research problem in the area of NLP for educational applications is usually inspired by a real-world student or teacher need. For example, given the enormous student/instructor ratio in MOOCs, it is difficult for an instructor to read all the posts in a MOOC’s discussion forums; can NLP instead identify the posts that require an instructor’s intervention? Next, progressing to the bottom of the figure, constraints on solutions to the problem are formulated by taking into account relevant theory or data-driven findings from the literature. For example, even before MOOCs, there was a pedagogical literature regarding instructor intervention. Finally, progressing to the upper left of the figure, an NLP-based technology is designed, implemented, and evaluated. Based on an error analysis, the cycle likely iterates. For example, an intervention system developed for a science MOOC might need revision to meet the needs of a humanities instructor.

Because “off the shelf” NLP approaches often face challenges when applied to educational problems and data, innovative NLP research typically results from this lifecycle. Some standard challenges when applying NLP to education are shown in the middle of the figure. First, because many NLP tools have been trained on professionally written texts such as the Wall Street Journal, they often do not perform well when applied to texts written by students. Second, when predicting an educationally related dependent variable, the independent variables often need to be restricted to those that are pedagogically meaningful. For example, although word count can very accurately predict many types of essay scores, word count is typically not part of a human’s grading rubric and would thus not be useful to mention in student feedback. Finally, since many NLP algorithms are embedded in interactive applications, technical solutions often need to be real-time even at MOOC scale.

In addition to building computer tutors, other uses of dialogue technology for teaching have been explored. Researchers have developed systems that play the role of student peers rather than expert tutors (Kersey et al. 2009). There has also been interest in going beyond one-on-one computer-student conversational interaction, by not only enabling human-machine communication but also improving human-human communication. Dialogue agents have been used to facilitate a student’s dialogue with other human students, as in computer-supported collaborative learning (Kumar et al. 2007), or to enable students to observe the training dialogues of other students and/or virtual agents (Piwek et al. 2007). My own research has focused on the design and evaluation of a spoken tutorial dialogue system for conceptual physics, and the development of enabling data-driven technologies. Research from my group has shown how to enhance the effectiveness of tutorial dialogue systems that interact with students for hours rather than minutes by developing and exploiting novel discourse analysis methods (Rotaru and Litman 2009). We also used what students said and how they said it to detect pedagogically relevant user affective states, which in turn triggered system adaptations. The models for detecting student states and for associating adaptive system strategies with such states were learned from tutoring dialogue corpora using new data-driven methods (Forbes-Riley and Litman 2011). To support the use of reinforcement learning as one of our data-driven techniques, we developed probabilistic user simulation models for our less goal-oriented tutoring domain (Ai and Litman 2011) and tailored the use of reinforcement learning, with its differing state and reward representations, to optimize the choice of pedagogical tutor behaviors (Chi et al. 2011).

EXISTING SYSTEM

Online computer science courses can be massive, with enrollments ranging from thousands to even millions of students. Though technology has increased our ability to provide content to students at scale, assessing work and providing feedback (both for final work and partial solutions) remains difficult. Currently, giving personalized feedback, a staple of quality education, is costly for small, in-person classrooms and prohibitively expensive for massive classes. Autonomously providing feedback is therefore a central challenge for at-scale computer science education.

DISADVANTAGE

- This data provides the training set from which we can learn a shared representation for programs.

- To evaluate our program embeddings we test our ability to amplify teacher feedback.

PROPOSED SYSTEM

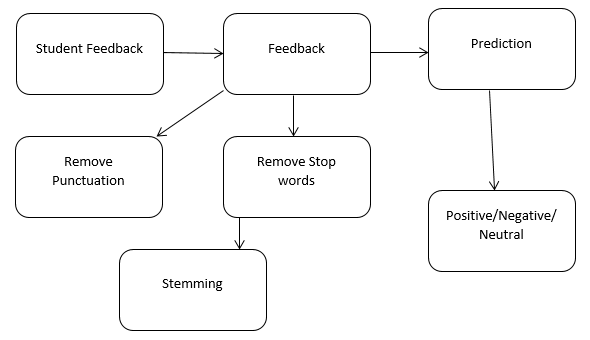

In the proposed methodology, we collect student feedback about classroom, exam, and lab facilities. We then predict the sentiment of the collected dataset using natural language processing. For NLP we use the NLTK toolkit, so we do not need to supply our own training data for this step. The NLP step separates the feedback into groups: each item is analyzed as positive, negative, or neutral, and the results are arranged into these three groups to show which departments receive positive and negative feedback. Because student feedback is collected as single-sentence responses to questions, it requires sentiment analysis at the sentence level. For sentiment classification, machine learning methods have been used to classify the feedback for each question as positive or negative. Testing is carried out with a trained model built using a supervised learning algorithm. We evaluate the total responses for every question and determine the polarity of the feedback received in the context of that question. The evaluation of responses is purely data driven and hence simple, while the classification of natural language text involves sentiment analysis.
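The sentence-level grouping described above can be sketched as follows. This is only an illustration under stated assumptions: the word lists `POSITIVE_WORDS` and `NEGATIVE_WORDS` and the function names are hypothetical stand-ins so that the example runs with the Python standard library alone. In practice a pretrained analyzer, such as the VADER analyzer that ships with NLTK, could take the place of the hand-made lexicon.

```python
import re
from typing import Dict, Iterable, List

# Hypothetical toy lexicons, used only for illustration; a real system
# would rely on a proper sentiment lexicon or a trained model.
POSITIVE_WORDS = frozenset({"good", "great", "excellent", "helpful", "clean", "clear"})
NEGATIVE_WORDS = frozenset({"bad", "poor", "crowded", "boring", "broken", "unclear"})


def classify_sentence(sentence: str) -> str:
    """Label one feedback sentence as positive, negative, or neutral."""
    tokens = re.findall(r"[a-z']+", sentence.lower())
    # Score = number of positive words minus number of negative words.
    score = sum(t in POSITIVE_WORDS for t in tokens) - sum(
        t in NEGATIVE_WORDS for t in tokens
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def group_by_polarity(feedback: Iterable[str]) -> Dict[str, List[str]]:
    """Arrange feedback sentences into the three polarity groups."""
    groups: Dict[str, List[str]] = {"positive": [], "negative": [], "neutral": []}
    for sentence in feedback:
        groups[classify_sentence(sentence)].append(sentence)
    return groups
```

A simple word count like this ignores negation ("not good") and intensity, which is one reason a dedicated sentiment tool is usually preferred over a hand-written lexicon.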

ADVANTAGES

- Understanding the patterns in data such as student feedback is crucial to effectively improving the performance of the institution and to creating plans that enhance its teaching and learning experience.

- An opinion mining technique classifies the students’ feedback obtained during the evaluation survey that is conducted every semester, capturing students’ views on various features of teaching and learning such as modules, teaching, and assessments.

- Student feedback improves communication between the lecturer and the students, giving the lecturer an overall summary of the students’ opinions.

SYSTEM ARCHITECTURE

HARDWARE AND SOFTWARE REQUIREMENTS

HARDWARE

- 4GB or 8GB RAM

- Windows 10 32 or 64 bit

SOFTWARE

- Anaconda3

- Python

- Jupyter Notebook

DATASET COLLECTION

In this phase, data containing the relevant information is prepared for analysis. Pre-processing and cleaning the data is one of the most important tasks that must be done before the dataset can be used for machine learning. Real-world data is noisy, incomplete, and inconsistent, so it needs to be cleaned.

PRE-PROCESSING

Raw feedback scraped from Twitter generally results in a noisy dataset. This is due to the casual nature of people’s usage of social media. Such feedback has certain special characteristics, such as emoticons and user mentions, which have to be suitably extracted. Therefore, raw Twitter data has to be normalized to create a dataset that can be easily learned by various classifiers. We applied an extensive number of pre-processing steps to standardize the dataset and reduce its size. We first perform some general pre-processing on the feedback, as follows.

- Convert the tweet to lower case.

- Replace 2 or more dots (.) with a space.

- Strip spaces and quotes (" and ') from the ends of the tweet.

- Replace 2 or more spaces with a single space.
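The four general steps listed above can be sketched with Python's standard `re` module. The function name `preprocess_feedback` is hypothetical, and stripping typographic (curly) quotes in addition to straight quotes is an assumption made here, not something the steps above specify.

```python
import re


def preprocess_feedback(text: str) -> str:
    """Apply the four general normalization steps to one feedback string."""
    # 1. Convert to lower case.
    text = text.lower()
    # 2. Replace runs of two or more dots with a single space.
    text = re.sub(r"\.{2,}", " ", text)
    # 3. Strip spaces and quotes from both ends
    #    (curly quotes are included as an assumption).
    text = text.strip(" \"'\u201c\u201d\u2018\u2019")
    # 4. Collapse runs of two or more spaces into one space.
    text = re.sub(r" {2,}", " ", text)
    return text


if __name__ == "__main__":
    print(preprocess_feedback('  "The Lab was GREAT...   but crowded"  '))
```

The steps are applied in the order given in the list; collapsing spaces last also cleans up the extra spaces introduced when dots are replaced.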

EXTRACTION OF FEATURE SET

In this phase, the cleaned data obtained from the pre-processing phase is used to build the feature sets, or training data, for the student feedback. Training a classifier on the maximum number of features, including irrelevant or redundant ones, can negatively affect the algorithm's performance. It is therefore necessary to carefully select the number and types of features that will be used to train the machine learning algorithms. Various feature selection techniques can be used to choose the features in the feature set or training data. The feature set or training data can be prepared from the cleaned data using any of the available techniques, such as bag of words, n-grams, and POS or TOS tagging. The training data can also be prepared by assigning labels to the feedback and dividing it into two classes, a positive class and a negative class. The feature sets and training set obtained by any of the above methods are then used to implement the machine learning algorithms.
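As a rough illustration of the bag-of-words and n-gram techniques mentioned above, the sketch below counts unigrams and bigrams in a cleaned feedback string and pairs each feature set with its class label. The function names, whitespace tokenization, and the choice of `max_n=2` are assumptions made for the example.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def ngrams(tokens: List[str], n: int) -> List[str]:
    """Return all contiguous n-grams of a token list as space-joined strings."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def extract_features(text: str, max_n: int = 2) -> Counter:
    """Count every 1-gram up to max_n-gram in an already cleaned text."""
    tokens = text.split()  # assumes the text was normalized beforehand
    features: Counter = Counter()
    for n in range(1, max_n + 1):
        features.update(ngrams(tokens, n))
    return features


def build_training_set(
    labelled: Iterable[Tuple[str, str]], max_n: int = 2
) -> List[Tuple[Counter, str]]:
    """Turn (text, label) pairs into (feature counts, label) pairs."""
    return [(extract_features(text, max_n), label) for text, label in labelled]
```

With `max_n=1` this reduces to a plain bag of words; larger values add word-order information at the cost of many more, sparser features, which is where feature selection becomes important.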

TESTING ON DATASET

Testing is carried out with a trained model built using a supervised learning algorithm. We evaluate the total responses for every question and determine the polarity of the feedback received in the context of that question. The evaluation of responses is purely data driven and hence simple, while the classification of natural language text involves sentiment analysis. To test the model, we collected data from students who posted their views in online discussion forums.
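One way to picture the per-question evaluation is the sketch below, which takes predicted labels for individual responses, reports the majority polarity for each question, and measures accuracy against hand-labelled test data. The function names and the majority-vote rule are assumptions; the text above does not say how the overall polarity of a question is decided.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Sequence, Tuple


def question_polarity(predictions: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Map each question id to the most frequent predicted label.

    `predictions` holds (question_id, label) pairs, one per response.
    Ties go to the label that was seen first for that question.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for question_id, label in predictions:
        counts[question_id][label] += 1
    return {q: c.most_common(1)[0][0] for q, c in counts.items()}


def accuracy(predicted: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of predicted labels that match the gold labels."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        return 0.0
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)
```

Accuracy alone can be misleading when one polarity dominates the test set, so per-class counts are worth inspecting alongside it.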

CONCLUSION

In this paper we have presented a method for finding simultaneous embeddings of preconditions and postconditions into points in a shared Euclidean space, where a program can be viewed as a linear mapping between these points. These embeddings are predictive of the function of a program and, as we have shown, can be applied to the task of propagating teacher feedback. The courses we evaluate our model on are compelling case studies for different reasons.

